This post is a true story of how I migrated a system for our customer from a legacy Mobile PaaS to AWS.

Project overview

- Duration: Jun-2020 - Aug-2022 (2 years 2 months).

- Scope of work: System design & implementation, backend software development, data migration, operation, and post-release support.

- Effort spent: ~150 man-months (MM).

- Number of end users: ~2 million at cutover time.

- Number of regions: 5 (EU, Japan, Singapore, China, India).

Beginning

In November 2019, I had a chance to join an assessment phase for a customer in Kyoto. Our customer is a major healthcare device manufacturer in Japan whose products are widely used around the world. They have a mobile application (iOS and Android) that connects to the healthcare devices via Bluetooth to read data, change settings, and synchronize data with a backend server.

The backend server was deployed on Kii Cloud, an IoT and mobile backend platform (PaaS). As the number of end users grew, the system ran into several problems that affected the customer's business:

1) Running cost: Kii Cloud's pricing model is based on the number of monthly active users (MAU). However, as our customer explained, the pricing is not pay-as-you-go: capacity has to be bought in packages, for example 0-100k, 100k-500k, 1 million-2 million, and so on. At the time of the assessment phase, the MAU count was ~900k in total across 5 regions (US, EU, Japan, Singapore, China), so they needed to prepare for the next pricing package.

2) Customizability: Kii Cloud is a platform as a service. The advantage of a PaaS is that you only need to focus on your business logic; basics such as authentication/authorization, data management, and client-server data synchronization are fully supported by the platform. However, as soon as you need custom logic, everything becomes complicated. Kii Cloud lets you write custom code in a file called server-code. When I started investigating, there were 14,000 lines of code in a single JavaScript file with hundreds of methods.

3) Performance: Kii Cloud is based on NoSQL, which means it is not designed for OLAP. Data aggregation, reporting, and analytics took too long because we had to read all the data instead of running SQL-style queries. For example, a simple batch job that counts active devices by model took ~6 hours to complete.

4) Vendor lock-in: Our customer had a technical support team from Kii Cloud that helped them implement custom business logic, troubleshoot the system, and operate it. That team also built some custom modules dedicated to our customer, because they were a big client. However, the more custom modules were implemented, the more the customer was locked in to the platform provider, and the higher the operation cost became.

Challenges

To resolve the problems above, our customer planned to migrate their system to a new cloud service, and AWS was the first candidate because they were already familiar with it.

However, before they could decide to move, the following challenges needed solutions:

1) How to migrate user profiles to the new system and allow users to log in again with their old passwords?

2) How to migrate/sync data from the old system to the new one in near real time, to reduce downtime at cutover?

3) How to ensure that all client applications and connected systems continue to work after switching to the new system?

4) How to cut running cost and operation cost on the new system?

5) How to increase performance, improve user experience, and reduce backend job execution time after migrating to the new system?

Solution

1. Overview of technology considerations and selection

- Programming language: We chose Java, mainly because our company's developers are familiar with it and it is easy to find members for a big project.

- User management service/framework: Cognito was the first candidate because it is fully managed by AWS and its per-MAU pricing is reasonable. For the China region, we used a self-developed module based on Keycloak.

- Database management service: We considered two types of database: NoSQL and SQL. After investigating the complexity of the data, we found that MySQL with the JSON data type lets clients send data without a predefined structure, while aggregation and statistics tasks can still be performed easily with queries.

- Email service: Simple Email Service (SES) was the best choice to reduce implementation effort.

- Backend deployment method: Containers and Lambda were the two candidates. However, since we had chosen Java, which needs more resources and has a load time that must be taken into account, the container model was the better fit.

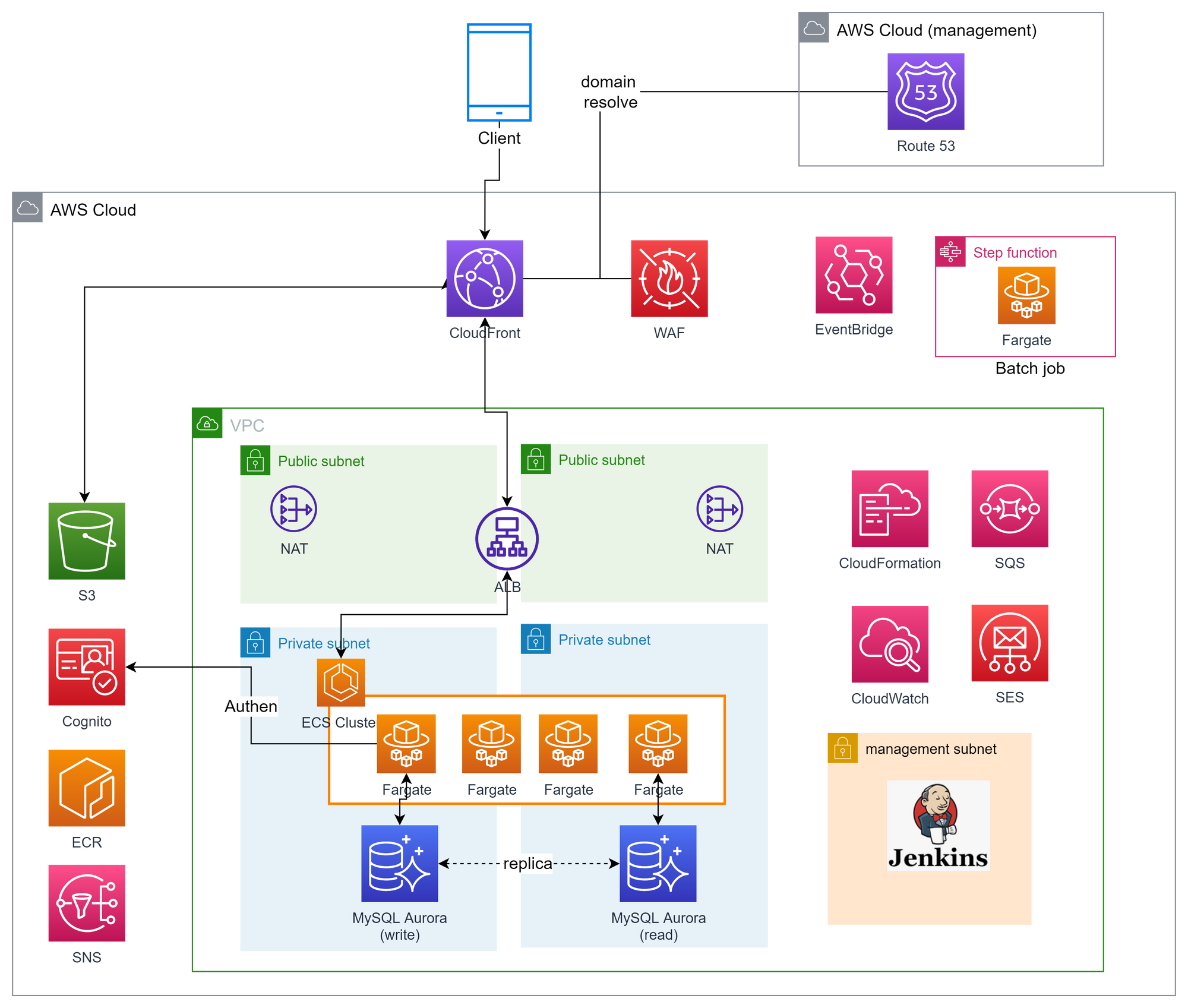

Final system architect was looks like below:

2. User profile migration

To allow users to log in to the new system with their old passwords after the migration, two methods were considered:

- Method 1: After system switching, when a user logs in to the new system for the first time, make a request to the old Kii Cloud system to verify the password. If the password the user entered is correct, let them in and force-update the password in the new system (Cognito).

This method carries a risk: when users log in for the first time after the switch, a huge number of requests would be sent to Kii Cloud, which could impact their system.

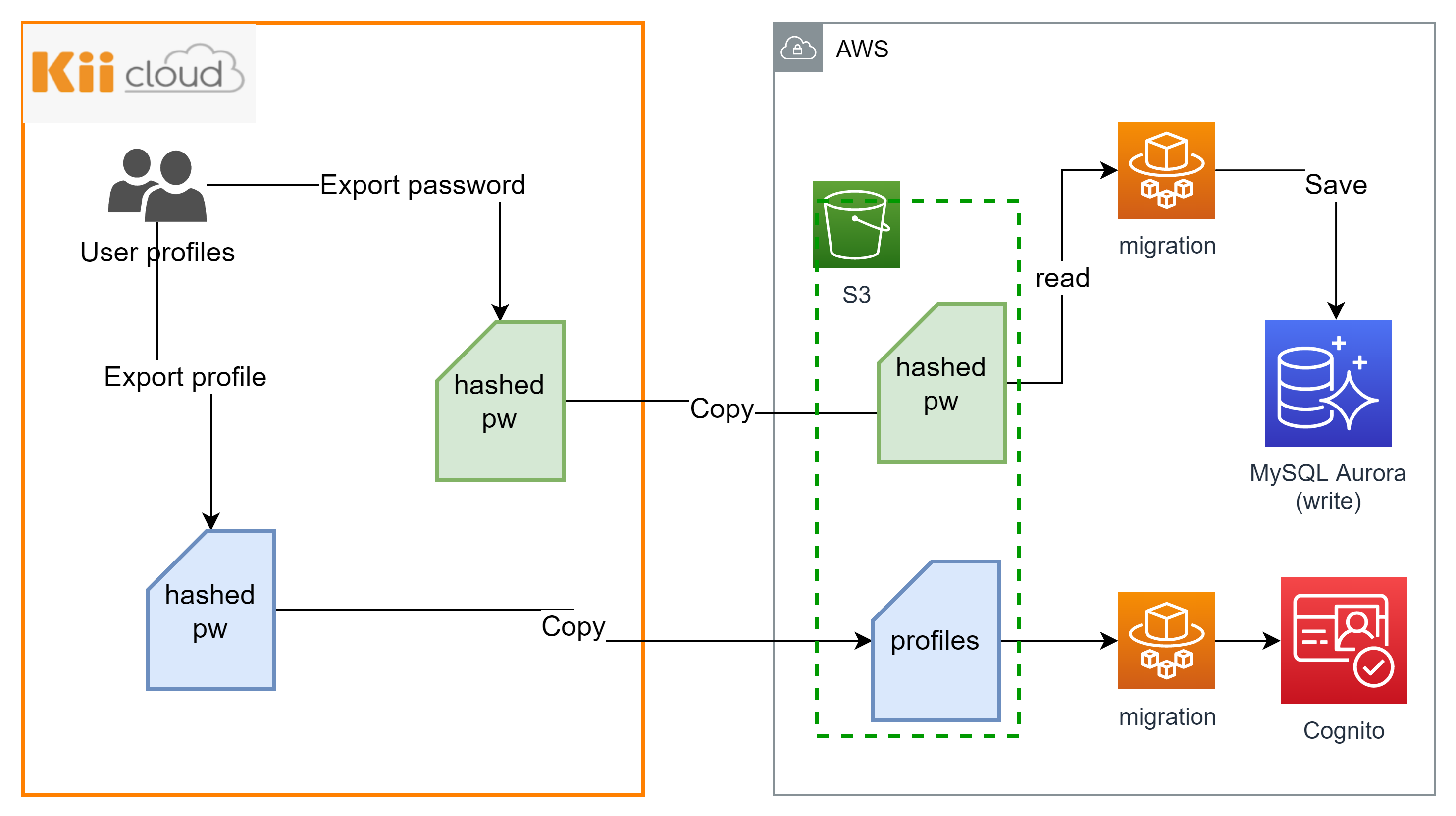

- Method 2: Ask the Kii Cloud support team to export all users' passwords (or hashed passwords), then create profiles with those passwords in the new system. Fortunately, after many discussions, they agreed to send us the hashed passwords and the hash key twice: first when the migration started, and again when connections from clients were stopped.

The user profile and password migration process was split into two steps:

- Step 1: Migrate user profiles and passwords to the new system (before system switching).

User profiles are created in Cognito (without passwords).

The old (hashed) password is saved in the MySQL database in a separate field (e.g. old_pass_word).

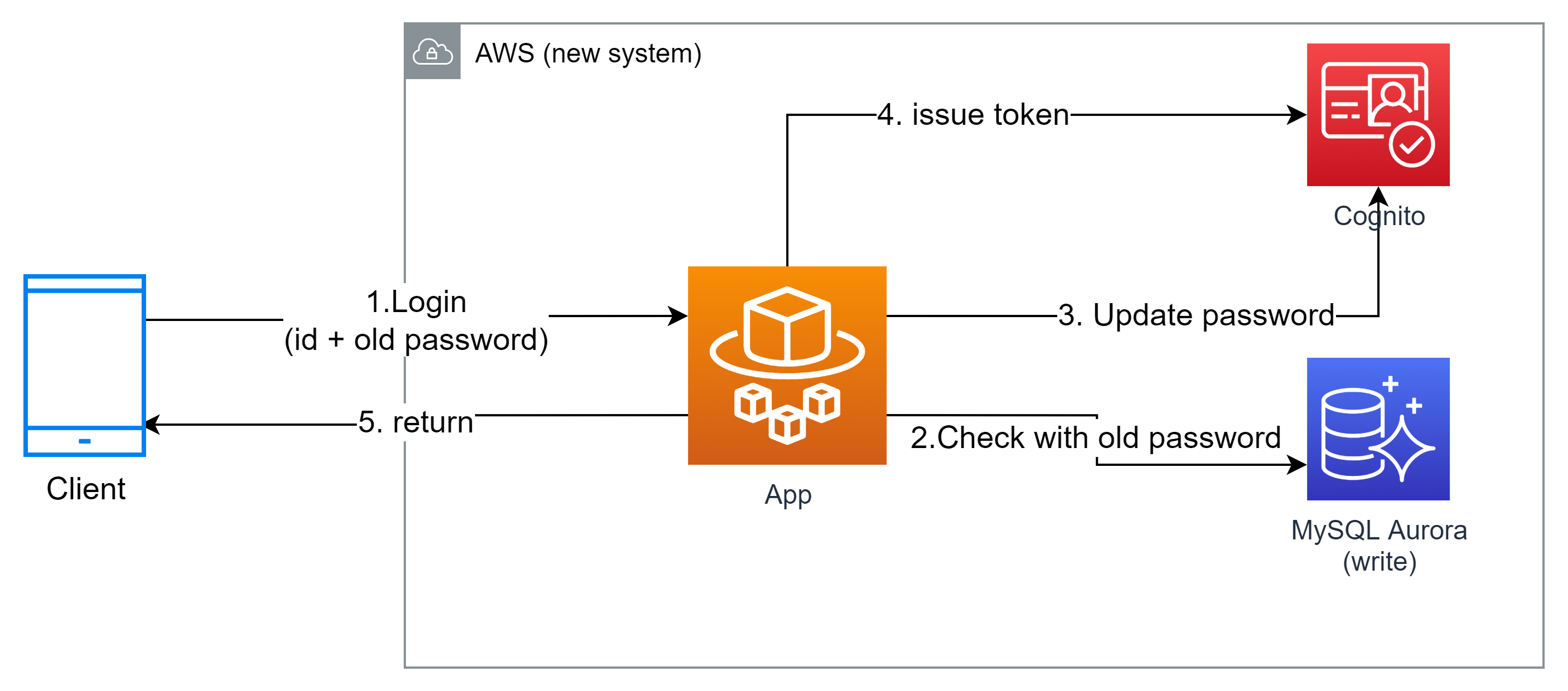

- Step 2: After the user successfully logs in to the new system, activate the user profile.

1. The user requests to log in with their ID and old password (this only works for users who remember their password; users who have forgotten it must request a password reset via email).

2. The backend service checks the password using the hash key provided by Kii Cloud. If it matches, continue to step 3.

3. Update the password on the user's Cognito profile using the admin force change password API.

4. Issue a token with the new password.

5. Return the result to the client.

After the first successful login to the new system, the user's old password is removed, and from the second login onward, login requests are sent to Cognito.

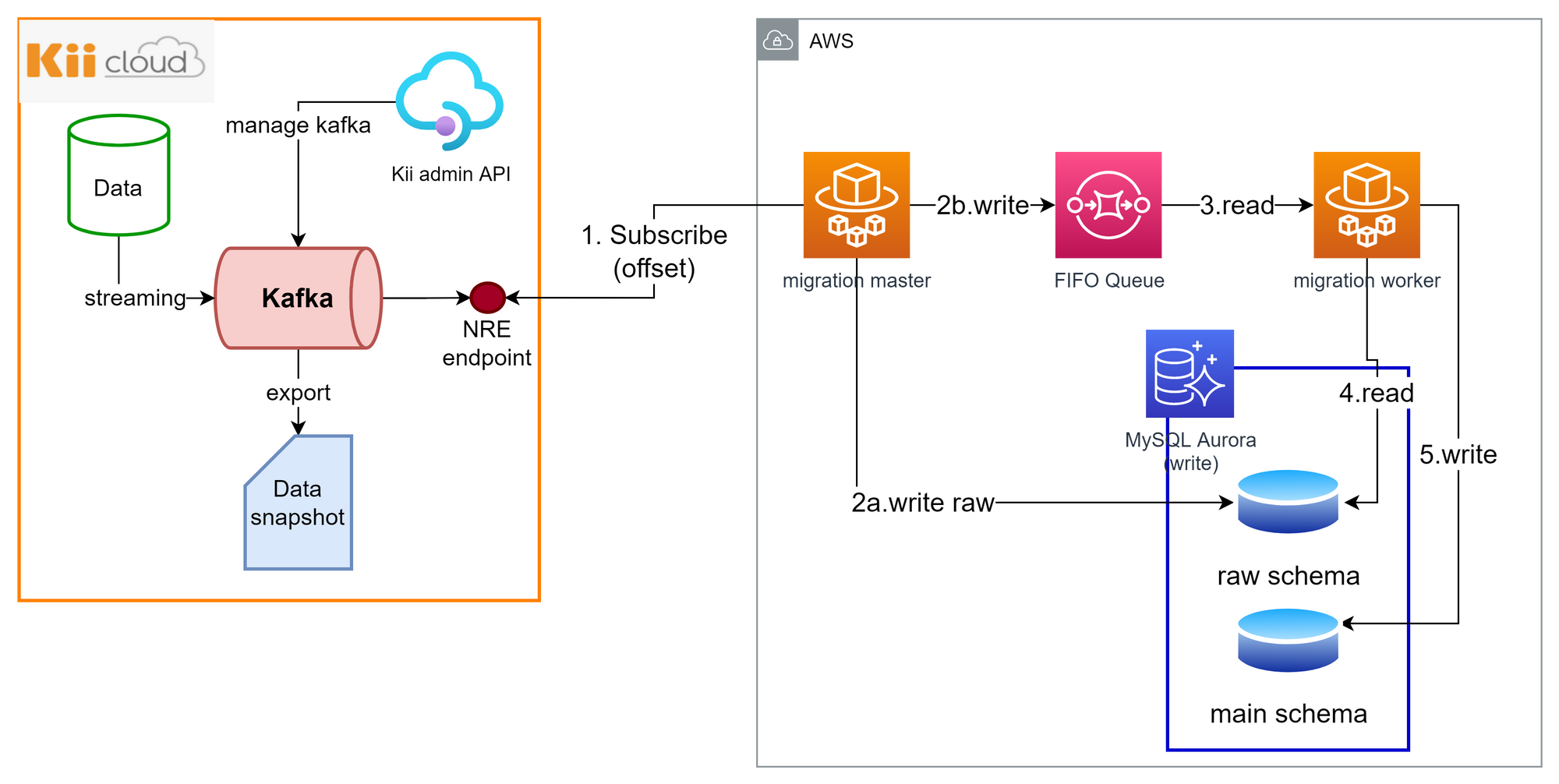
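The first-login flow in Step 2 can be sketched roughly as follows. This is a minimal illustration, not the project's code: the project itself was written in Java, and Python is used here only to keep the sketch short. The hashing scheme (HMAC-SHA256 with the hash key) is an assumption, since the real algorithm is whatever Kii Cloud used. `FakeIdentityProvider`, `legacy_hash`, and `login` are hypothetical names; in a real system the `set_password` call would map to a Cognito admin API call.

```python
import hashlib
import hmac
from dataclasses import dataclass, field
from typing import Dict, Optional


def legacy_hash(password: str, hash_key: bytes) -> str:
    # ASSUMPTION: HMAC-SHA256. The real scheme is defined by the old platform.
    return hmac.new(hash_key, password.encode("utf-8"), hashlib.sha256).hexdigest()


@dataclass
class FakeIdentityProvider:
    """Hypothetical in-memory stand-in for the managed user pool."""
    passwords: Dict[str, str] = field(default_factory=dict)

    def set_password(self, user_id: str, password: str) -> None:
        self.passwords[user_id] = password

    def authenticate(self, user_id: str, password: str) -> Optional[str]:
        if self.passwords.get(user_id) == password:
            return f"token-for-{user_id}"
        return None


def login(user_id: str, password: str, old_hashes: Dict[str, str],
          hash_key: bytes, idp: FakeIdentityProvider) -> Optional[str]:
    old_hash = old_hashes.get(user_id)
    if old_hash is None:
        # Profile already activated: authenticate against the user pool only.
        return idp.authenticate(user_id, password)

    # Step 2: check the submitted password against the migrated hash.
    candidate = legacy_hash(password, hash_key)
    if not hmac.compare_digest(candidate, old_hash):
        return None

    # Step 3: store the password in the user pool.
    idp.set_password(user_id, password)
    # Step 4: issue a token using the new credentials.
    token = idp.authenticate(user_id, password)
    # The old hash is no longer needed after the first successful login.
    del old_hashes[user_id]
    # Step 5: return the result to the caller.
    return token
```

Comparing the hashes with `hmac.compare_digest` avoids leaking timing information, and deleting the old hash only after the new password is stored means a failure in between leaves the user able to retry.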

3. Data migration and near-real-time synchronization

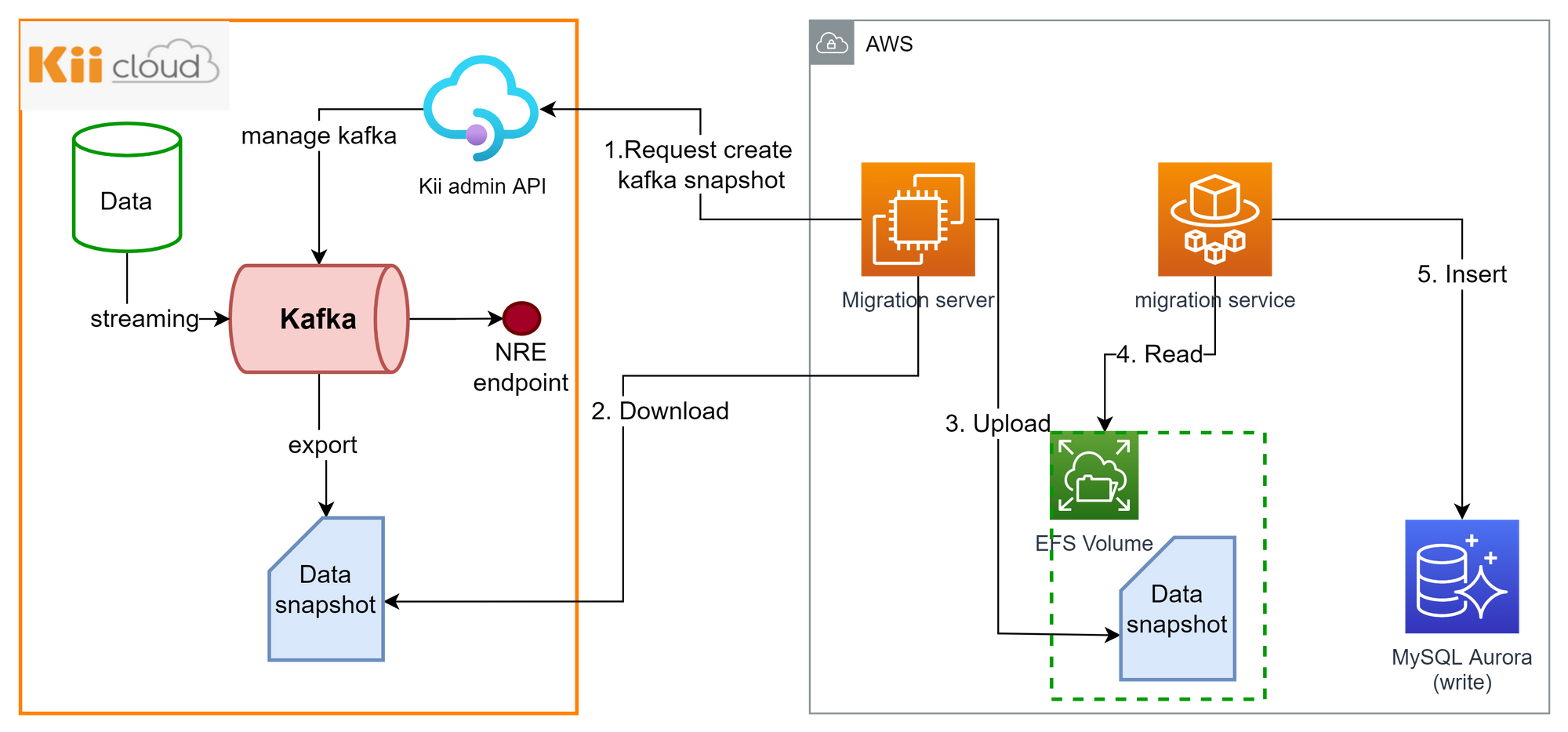

Kii Cloud supports a feature that lets an administrator export the data of all users as a Kafka stream. Once the data has been loaded into the Kafka stream, we can export it as a static file (which contains the data in sequence) or subscribe to consume it in near real time.

1. The migration server calls an admin API that asks the Kafka streaming service to take a snapshot of the current data.

2. After Kafka finishes creating the data snapshot, a number called the [offset number] is also returned to the client, for use by the near-real-time consumer. The migration server downloads the snapshot file and saves it to an EFS volume.

3. The migration service reads the snapshot data in sequence.

5. The migration service transforms the data and saves it to the MySQL database.
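To make the role of the offset number concrete, here is a small in-memory sketch of the hand-off between the snapshot load and the stream. It is an illustration under assumptions, not the real migration service: the record layout (`key`, `value`, `op`, `offset`) is hypothetical, and the offset is assumed to be the first change that is not yet contained in the snapshot.

```python
def initial_load(snapshot_records):
    """Read the snapshot in sequence and build the initial state."""
    store = {}
    for record in snapshot_records:
        store[record["key"]] = record["value"]
    return store


def apply_change(store, change):
    """Apply one insert/update/delete event to the store."""
    if change["op"] == "delete":
        store.pop(change["key"], None)
    else:
        store[change["key"]] = change["value"]


def catch_up(store, stream, start_offset):
    """Apply stream events from start_offset onward.

    Events below start_offset are skipped because, by assumption, the
    snapshot already contains their effect. Returns the next offset to
    resume from.
    """
    next_offset = start_offset
    for event in stream:
        if event["offset"] < start_offset:
            continue
        apply_change(store, event)
        next_offset = event["offset"] + 1
    return next_offset
```

Because the consumer starts exactly where the snapshot ended, no change made during the (possibly long) snapshot load is lost or applied twice.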

After the initial data migration is finished, near-real-time data synchronization between the old and new systems is performed.

1. The [migration master] service subscribes to the Kafka endpoint as a consumer, specifying the [offset number] obtained in the step above. Whenever data is inserted, updated, or deleted on Kii Cloud, those changes are delivered to the [migration master] service.

2a. The [migration master] service writes the raw data into the database.

2b. The [migration master] service sends a message to SQS. We used a FIFO queue to preserve the order of the data.

3. The [migration worker] service reads messages from the SQS queue to find the data that needs to be processed.

4. The [migration worker] service reads the data from the raw schema.

5. The [migration worker] service transforms the data and writes it to the processed schema.

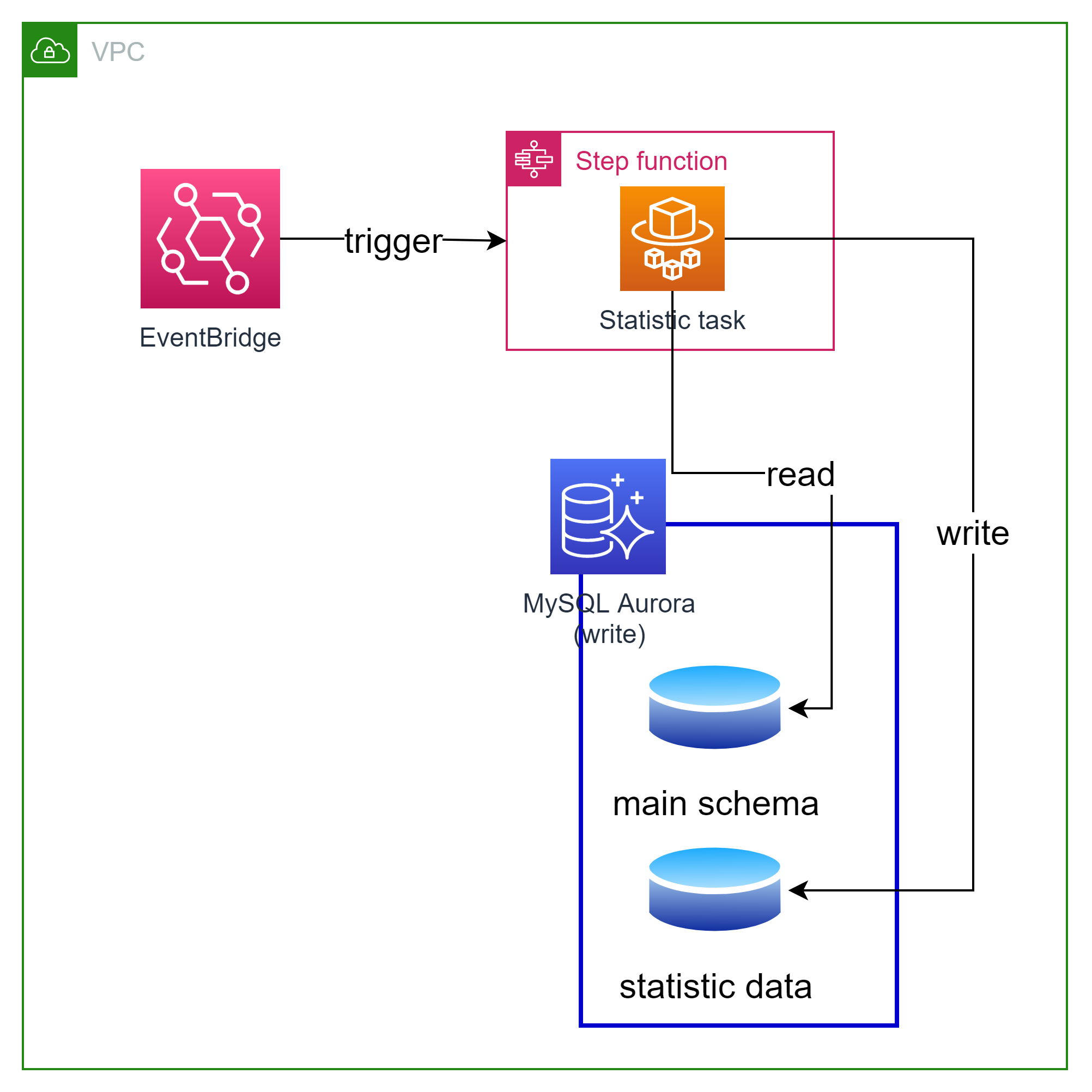
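The master/worker split above can be sketched with in-memory stand-ins. This is a simplified illustration, not the project's code: the two dictionaries play the role of the raw and processed schemas, a `deque` plays the role of the SQS FIFO queue, and the field names (`user_id`, `payload`, `weight`) are hypothetical. The message dictionary uses the parameter names of the SQS `SendMessage` call; grouping messages by user ID is an assumption about how ordering was scoped.

```python
import json
from collections import deque


def build_fifo_message(queue_url, change):
    """Build SendMessage parameters for an SQS FIFO queue."""
    return {
        "QueueUrl": queue_url,
        # Only a pointer to the raw row travels through the queue.
        "MessageBody": json.dumps({"raw_id": change["offset"]}),
        # FIFO queues keep order within one message group (assumed: per user).
        "MessageGroupId": change["user_id"],
        # Lets the queue drop a change that is sent twice.
        "MessageDeduplicationId": str(change["offset"]),
    }


def master_handle(change, raw_table, queue, queue_url):
    raw_table[change["offset"]] = change                   # step 2a
    queue.append(build_fifo_message(queue_url, change))    # step 2b


def worker_drain(raw_table, queue, processed_table):
    while queue:
        message = queue.popleft()                          # step 3
        raw_id = json.loads(message["MessageBody"])["raw_id"]
        change = raw_table[raw_id]                         # step 4
        # Step 5: hypothetical transform into the new data model.
        processed_table[change["user_id"]] = {
            "weight_kg": change["payload"]["weight"],
        }
```

Storing the raw change first and sending only a reference through the queue keeps messages small and leaves an untouched copy of the source data for later validation.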

4. Performance improvement for batch job

The statistics batch jobs were rewritten using MySQL; only the business logic of the old system was kept. Job scheduling is managed by EventBridge, while execution status is monitored by Step Functions.

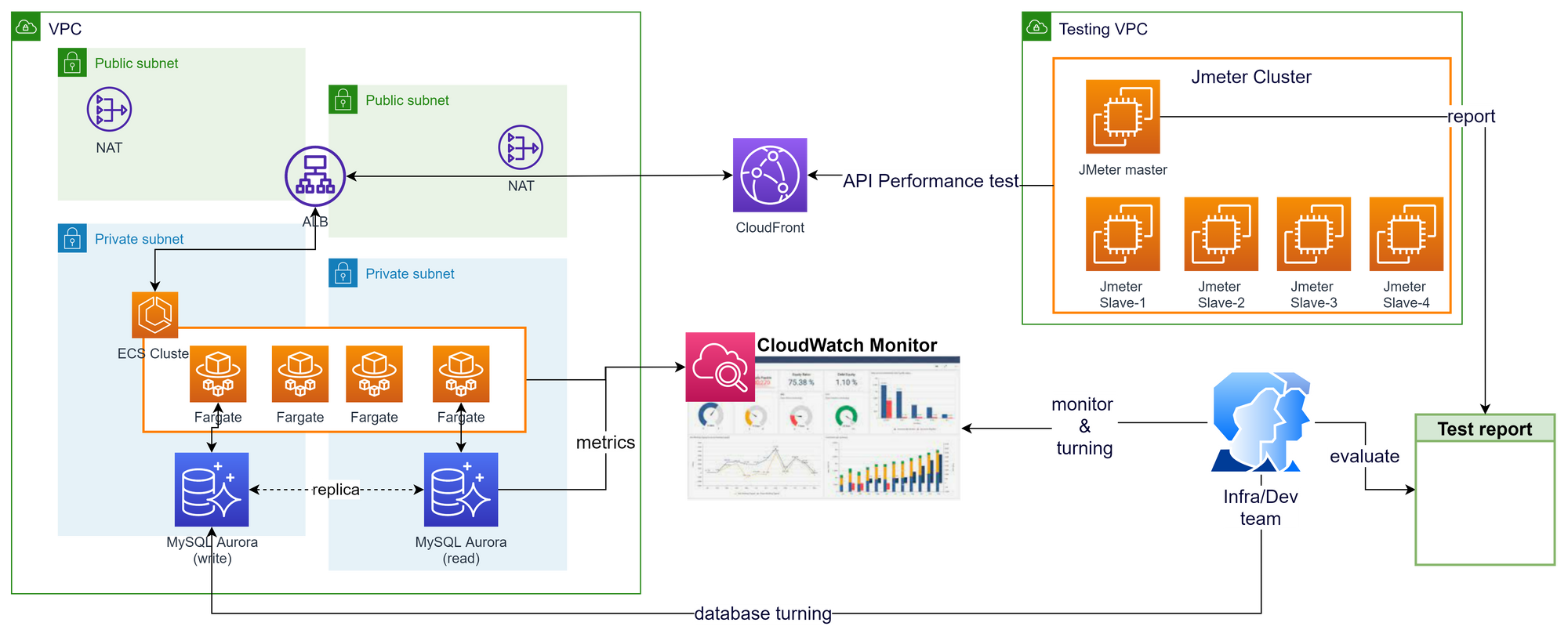
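As an illustration of why moving the statistics to SQL helps, the "active devices by model" job mentioned earlier reduces to a single grouped query. The sketch below uses Python's built-in `sqlite3` as a stand-in for MySQL so that it runs anywhere; the table, column, index, and model names are all hypothetical.

```python
import sqlite3


def build_demo_db():
    """Create an in-memory database with a few hypothetical devices."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE devices ("
        " device_id TEXT PRIMARY KEY,"
        " model TEXT NOT NULL,"
        " last_active_at TEXT NOT NULL)"
    )
    # An index on the filter and grouping columns lets the database
    # avoid scanning every row.
    conn.execute(
        "CREATE INDEX idx_devices_active_model"
        " ON devices (last_active_at, model)"
    )
    conn.executemany(
        "INSERT INTO devices VALUES (?, ?, ?)",
        [
            ("d1", "BP-100", "2022-08-01"),
            ("d2", "BP-100", "2022-08-15"),
            ("d3", "SC-200", "2022-08-20"),
            ("d4", "SC-200", "2022-05-02"),
        ],
    )
    return conn


def active_devices_by_model(conn, active_since):
    """Count devices per model that were active on or after active_since."""
    rows = conn.execute(
        """
        SELECT model, COUNT(*) AS active_devices
        FROM devices
        WHERE last_active_at >= ?
        GROUP BY model
        ORDER BY model
        """,
        (active_since,),
    ).fetchall()
    return dict(rows)
```

The database does the filtering and counting, so the job no longer has to read every record into the application, which is the pattern that made the old batch so slow.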

5. API performance testing and tuning

At the beginning of the implementation phase, all backend components (ECS cluster, RDS database) were designed and set up for monitoring.

Performance testing was performed using a JMeter cluster with 1 master and 4 slave servers. Auto Scaling was applied to the ECS cluster based on CPU and memory utilization. The infra and dev teams worked together to tune instance sizes and database indexes to meet the customer's expected workload.

Results after migrating to AWS

- Performance: API response time became ~2 times faster than on the old system. The execution time of the statistics batch job dropped from 6 hours to 10 minutes.

- Running cost: Approximately 70% of the running cost was cut. Auto Scaling helps reduce infra running cost during low-workload periods.

- Operation cost: After the migration to AWS, system operation was assigned to the Vietnamese team, which reduced the customer's operation effort by 60%.

- Vendor lock-in and feature customizability: After converting to a Java-based containerized application architecture, the development team can easily add or modify features as the customer requires. No more support is needed from the provider, because we only use open-source frameworks.

Lessons Learned

During the project, we ran into some trouble and made some mistakes that are worth recording as lessons learned.

Backend logic migration to a new language

Instead of converting the logic of the old code one by one, we read the SRS document and functional specification provided by the customer. Because the documents were not up to date with the latest source code, and because we misunderstood parts of them (they were written in Japanese), a lot of logic was converted to the new language incorrectly. The lesson: when migrating from one language to another, follow the logic of the latest source code rather than the documentation.

Change management and version control

At switching time for the Singapore region release, data migration and confirmation had been completed the night before. But after we opened the connection so that clients could connect from the mobile app, we hit a big problem: database CPU utilization reached 99% and response time was very slow.

First, we guessed that the database specification was smaller than needed, so we decided to double its size. But after the database modification was completed, nothing changed. Its CPU utilization was still at ~99%.

Digging deeper into troubleshooting, we saw logs showing that some SQL queries were being flagged as "SLOW QUERY", meaning they took too long to complete. This was strange, because we had run performance tests with a workload 4 times larger than that of the Singapore region.

However, the "SLOW QUERY" log showed that an important index was missing from the database. After comparing with the database design document, we concluded that the index really was missing (for some reason).

As a hotfix, we added the missing index(es) manually to resolve the high database load and, like magic, it worked!

After the migration for the Singapore region was finished, we investigated and found that the indexes added after the performance test phase had not been reflected in the JPA entity model. So we went live in production with source code that did not contain the needed change.
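One way to catch this kind of drift before go-live is an automated check that compares the indexes listed in the design document with the indexes that actually exist in the target database. The sketch below is only an illustration of the idea, not something we had in the project: it uses `sqlite3` and its `sqlite_master` catalog as a stand-in (on MySQL the equivalent information is available in `information_schema.STATISTICS`), and the table and index names are hypothetical.

```python
import sqlite3


def missing_indexes(conn, expected):
    """Return {table: [index names]} that are expected but not present.

    `expected` maps a table name to the set of index names taken from
    the database design document.
    """
    missing = {}
    for table, index_names in expected.items():
        rows = conn.execute(
            "SELECT name FROM sqlite_master"
            " WHERE type = 'index' AND tbl_name = ?",
            (table,),
        ).fetchall()
        actual = {name for (name,) in rows}
        absent = set(index_names) - actual
        if absent:
            missing[table] = sorted(absent)
    return missing
```

Run as part of the release checklist, a non-empty result would have flagged the missing index before clients were allowed to connect.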

Data validation after the migration process has finished

In a migration project, you not only have to migrate user data, you also have to prove that nothing went wrong during the migration. The customer will need a set of reports and evidence, such as log files and lists of failed data, to be sure everything was done properly before they approve the system switch.

At the beginning of the project, we had not thought about how to verify the data after migration, what tools needed to be developed, or what information had to be included in the final report.

After the migration was complete (on the testing environment), the customer required us to provide a detailed report for each type of data. Data that failed to migrate had to be grouped into patterns, to confirm that only invalid data had failed.

The development team had to spend a lot of effort building a tool to check the data on the new system, compare it with the old system, and produce the report. If data validation had been planned from the beginning, we would have been able to plan for it properly.
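The core of such a validation tool can be quite small. The following sketch shows one possible shape, under assumptions: records are compared as plain dictionaries keyed by ID, and `find_problem` is a hypothetical validation function that returns a failure pattern name for invalid source data or `None` for valid data. It is not the tool we actually built.

```python
from collections import Counter


def reconcile(old_records, new_records, find_problem):
    """Compare source and target data and build a migration report."""
    report = {
        "matched": 0,
        "mismatched": [],               # migrated, but content differs
        "failed_by_pattern": Counter(),  # not migrated, known invalid data
        "unexplained_missing": [],      # not migrated, but data looks valid
    }
    for key, old_value in old_records.items():
        if key in new_records:
            if new_records[key] == old_value:
                report["matched"] += 1
            else:
                report["mismatched"].append(key)
            continue
        pattern = find_problem(old_value)
        if pattern is None:
            report["unexplained_missing"].append(key)
        else:
            report["failed_by_pattern"][pattern] += 1
    return report
```

The migration can be signed off only when `mismatched` and `unexplained_missing` are empty, which is exactly the "only invalid data failed" evidence the customer asked for.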

Conclusion

System rebuilds and data migrations are among the most complicated types of project.

However, if all aspects of feasibility are evaluated thoroughly, with a detailed plan and a solid software process, we can finish such a project with a satisfied customer.

*In this article, I focus only on data migration and system architecture. Other aspects, such as project management, cost estimation, application development, and stakeholder management, will be described in other articles.

Thank you and see you soon!